In short

- Mistral Medium 3 rivals Claude 3.7 and Gemini 2.0 at one-eighth the fee, focusing on enterprise AI at scale.

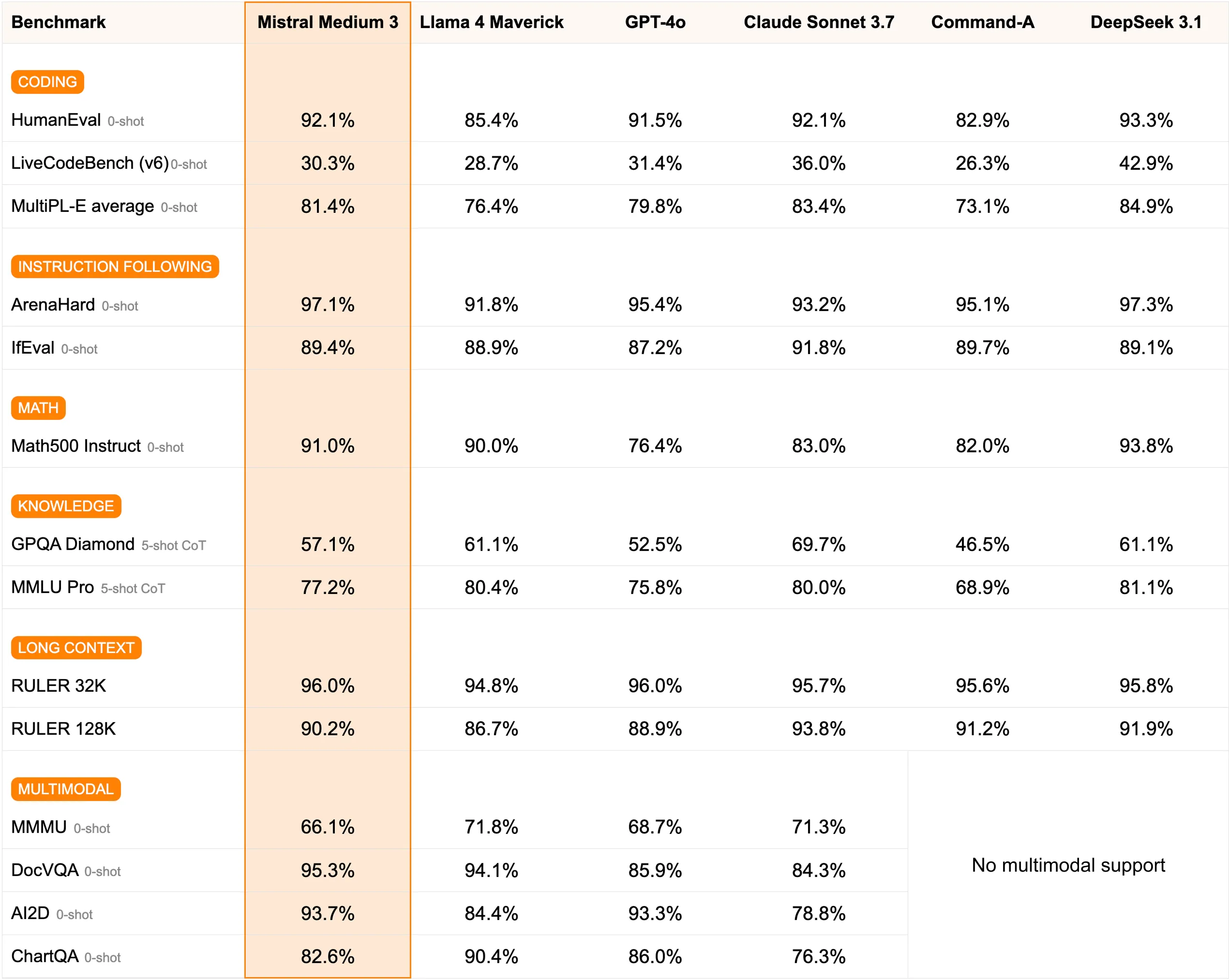

- The mannequin excels in coding and enterprise functions, outperforming Llama 4 Maverick and Cohere Command A in benchmarks.

- Now dwell on Mistral La Plateforme and Amazon Sagemaker, with Google Cloud and Azure integrations coming quickly.

Mistral Medium 3 dropped yesterday, positioning the model as a direct challenge to the economics of enterprise AI deployment.

The Paris-based startup, founded in 2023 by former Google DeepMind and Meta AI researchers, launched what it claims delivers frontier performance at one-eighth the operational cost of comparable models.

“Mistral Medium 3 delivers frontier performance while being an order of magnitude less expensive,” the company said.

The model represents Mistral AI’s most powerful proprietary offering to date, distinguishing itself from an open-source portfolio that includes Mistral 7B, Mixtral, Codestral, and Pixtral.

At $0.40 per million input tokens and $2 per million output tokens, Medium 3 significantly undercuts competitors while maintaining performance parity. Independent evaluations by Artificial Analysis placed the model “among the leading non-reasoning models with Medium 3 rivalling Llama 4 Maverick, Gemini 2.0 Flash and Claude 3.7 Sonnet.”
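To make the quoted list prices concrete, here is a small Python sketch that turns token volumes into a dollar estimate. It assumes the $0.40 (input) and $2.00 (output) per-million-token rates cited above and ignores anything else a real bill might include, such as discounts or taxes; the `Pricing` class and `estimate_cost` function are hypothetical helpers, not part of any Mistral SDK.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pricing:
    """Per-million-token list prices in US dollars (hypothetical helper)."""
    input_per_million: float
    output_per_million: float


# Rates quoted in the article for Mistral Medium 3 (assumed current list prices).
MEDIUM_3 = Pricing(input_per_million=0.4, output_per_million=2.0)


def estimate_cost(pricing: Pricing, input_tokens: int, output_tokens: int) -> float:
    """Estimate the dollar cost of a workload from its input and output token counts."""
    if input_tokens < 0 or output_tokens < 0:
        raise ValueError("token counts must be non-negative")
    cost = (
        input_tokens * pricing.input_per_million
        + output_tokens * pricing.output_per_million
    ) / 1_000_000
    # Round to sub-cent precision to hide floating-point noise.
    return round(cost, 6)


if __name__ == "__main__":
    # Hypothetical monthly workload: 50M input tokens, 10M output tokens.
    print(estimate_cost(MEDIUM_3, 50_000_000, 10_000_000))
```

For that hypothetical workload the estimate is $20 for input plus $20 for output, or $40 in total. Because output tokens are priced at five times the input rate, generation-heavy workloads will dominate the bill.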

Mistral Medium 3 independent evals: Mistral is back among the leading non-reasoning models with Medium 3 rivalling Llama 4 Maverick, Gemini 2.0 Flash and Claude 3.7 Sonnet

Key takeaways:

➤ Intelligence: We see substantial intelligence gains across all 7 of our evals compared… pic.twitter.com/mc9il9WV8J

Artificial Analysis (@ArtificialAnlys), May 8, 2025

The model particularly excels in professional domains.

Human evaluations demonstrated superior performance in coding tasks, with Sophia Yang, a Mistral AI representative, noting that “Mistral Medium 3 shines in the coding domain and delivers much better performance, across the board, than some of its much larger competitors.”

Benchmark results indicate Medium 3 performs at or above Anthropic’s Claude Sonnet 3.7 across various test categories, while significantly outperforming Meta’s Llama 4 Maverick and Cohere’s Command A in specialized areas like coding and reasoning.

The model’s 128,000-token context window is standard, and its multimodality lets it process documents and visual inputs across 40 languages.

But unlike the models that made Mistral famous, users won’t be able to modify it or run it locally.

Right now, the best offering for open-source enthusiasts is Mixtral-8x22B-v0.3, a mixture-of-experts model with eight experts of 22 billion parameters each, of which a router activates two for every token. Besides Mixtral, the company has over a dozen different open-source models available.
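For readers curious how a mixture-of-experts layer decides which experts run, here is a toy Python sketch of top-2 routing in the style Mixtral's published design describes: score all experts, keep the two highest, softmax over just those two scores, and mix the chosen experts' outputs. It is an illustration under simplifying assumptions, not Mistral's code. The "experts" are stand-in functions rather than real feed-forward layers, the router logits are supplied directly instead of being computed by a learned gate, and `top_k_moe` is a hypothetical name.

```python
import math
from typing import Callable, List, Sequence

Vector = List[float]
Expert = Callable[[Vector], Vector]


def softmax(xs: Sequence[float]) -> List[float]:
    """Numerically stable softmax."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def top_k_moe(x: Vector, router_logits: Sequence[float],
              experts: Sequence[Expert], k: int = 2) -> Vector:
    """Send one token through its k highest-scoring experts and mix their outputs."""
    if len(router_logits) != len(experts):
        raise ValueError("need one router logit per expert")
    if not 0 < k <= len(experts):
        raise ValueError("k must be between 1 and the number of experts")
    # Pick the k experts with the largest router scores.
    top = sorted(range(len(experts)), key=lambda i: router_logits[i], reverse=True)[:k]
    # Gate weights are a softmax over the selected experts' scores only.
    weights = softmax([router_logits[i] for i in top])
    out = [0.0] * len(x)
    for w, i in zip(weights, top):
        y = experts[i](x)  # only the selected experts are evaluated
        out = [o + w * yi for o, yi in zip(out, y)]
    return out


if __name__ == "__main__":
    # Eight toy experts: expert i simply scales its input by (i + 1).
    experts = [lambda v, s=i + 1: [s * t for t in v] for i in range(8)]
    logits = [0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 1.0, 1.0]
    print(top_k_moe([1.0, 2.0], logits, experts, k=2))
```

With the example logits, experts 6 and 7 tie for the top scores, each receives a gate weight of 0.5, and the output is the average of scaling by 7 and by 8. The point of the design is that only two of the eight experts do any work per token, which keeps compute well below what the total parameter count suggests.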

It’s also initially available for enterprise deployment only, not for consumer use via Le Chat, Mistral’s chatbot interface. Mistral AI emphasized the model’s enterprise adaptation capabilities, supporting continuous pretraining, full fine-tuning, and integration into corporate knowledge bases for domain-specific applications.

Beta customers in the financial services, energy, and healthcare sectors are testing the model for customer service enhancement, business process personalization, and complex dataset analysis.

The API is available immediately on Mistral La Plateforme and Amazon SageMaker, with integrations planned for IBM watsonx, NVIDIA NIM, Azure AI Foundry, and Google Cloud Vertex.
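For developers who want to try the model on La Plateforme, the sketch below builds a chat-completion request with only the Python standard library. It assumes Mistral's OpenAI-style chat endpoint at `https://api.mistral.ai/v1/chat/completions` and the model alias `mistral-medium-latest`; both are assumptions to check against Mistral's current API documentation. `build_chat_request` and `send` are hypothetical helper names.

```python
import json
import urllib.request

# Assumed endpoint and model alias; verify against Mistral's API docs before use.
API_URL = "https://api.mistral.ai/v1/chat/completions"
MODEL = "mistral-medium-latest"


def build_chat_request(api_key: str, prompt: str,
                       model: str = MODEL) -> urllib.request.Request:
    """Build (but do not send) a single-turn chat-completion POST request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
            "Accept": "application/json",
        },
        method="POST",
    )


def send(request: urllib.request.Request) -> str:
    """Send the request and return the first reply (assumes an OpenAI-style response body)."""
    with urllib.request.urlopen(request, timeout=30) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]
```

Usage would look like `send(build_chat_request(os.environ["MISTRAL_API_KEY"], "Summarize this contract."))`, reading the key from an environment variable rather than hard-coding it. Building and sending are kept separate so the request can be inspected or logged without making a network call.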

The announcement sparked considerable discussion across social media platforms, with AI researchers praising the cost-efficiency breakthrough while flagging the model’s proprietary nature as a potential limitation.

The model’s closed-source status marks a departure from Mistral’s open-weight offerings, though the company hinted at future releases.

“With the launches of Mistral Small in March and Mistral Medium today, it's no secret that we're working on something ‘large’ over the next few weeks,” Mistral’s Head of Developer Relations Sophia Yang teased in the announcement. “With even our medium-sized model being resoundingly better than flagship open source models such as Llama 4 Maverick, we're excited to ‘open’ up what's to come.”

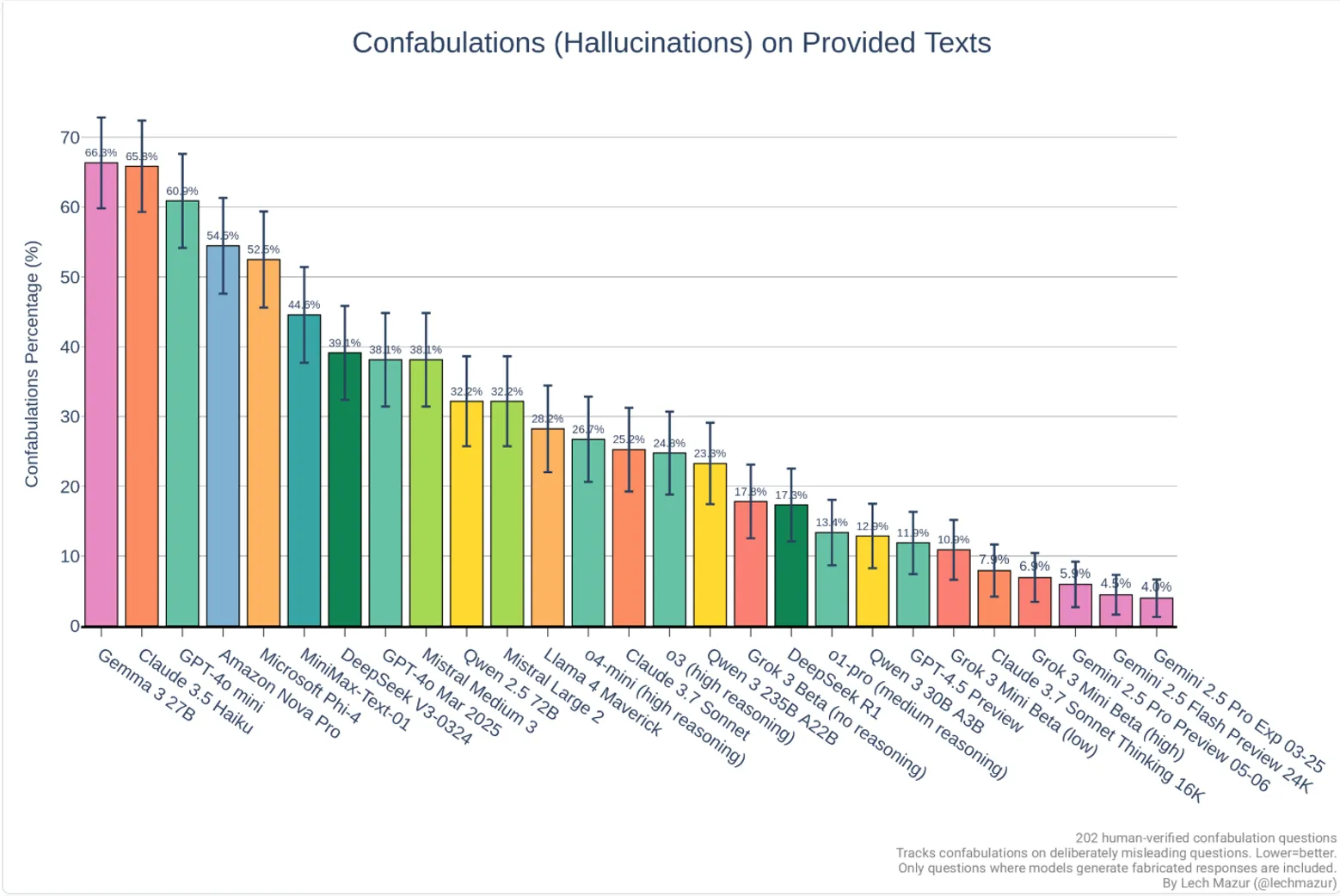

Mistral tends to hallucinate less than the average model, which is good news considering its size.

It’s better than Meta’s Llama 4 Maverick, DeepSeek V3 and Amazon Nova Pro, to name a few. Right now, the model with the fewest hallucinations is Google’s recently released Gemini 2.5 Pro.

This launch comes amid impressive business growth for the Paris-based company, despite it having been quiet since the launch of Mistral Large 2 last year.

Mistral recently launched an enterprise version of its Le Chat chatbot that integrates with Microsoft SharePoint and Google Drive, with CEO Arthur Mensch telling Reuters they’ve “tripled (their) business in the last 100 days, in particular in Europe and outside of the U.S.”

The company, now valued at $6 billion, is flexing its technological independence by operating its own compute infrastructure and reducing reliance on U.S. cloud providers, a strategic move that resonates in Europe amid strained relations following President Trump’s tariffs on tech products.

Whether Mistral’s claim of achieving enterprise-grade performance at consumer-friendly prices holds up in real-world deployment remains to be seen.

But for now, Mistral has positioned Medium 3 as a compelling middle ground in an industry that often assumes bigger (and pricier) equals better.

Edited by Josh Quittner and Sebastian Sinclair
